Up to this point in our AI blog series, I have been discussing (some might say ragging on) the practical implementation challenges of AI in the nonprofit sector. In today’s blog, I’m shifting the focus to a more global issue: Is using AI environmentally sustainable?

There is no getting around it. AI uses a lot of electricity, and in turn, a lot of water. A lot.

If you want the details on how AI uses electricity and water, read what Copilot, Microsoft’s AI assistant, says about it (reproduced below). There is a lot to say.

The bottom line is that while we tend to think of these AI tools as free, they aren’t. They carry a significant environmental cost. Cooling the data centers that power these systems requires vast quantities of water. And the more we use these AI tools, the greater their impact on our world.

Now, a lot about how we live has a negative impact on our environment: driving our combustion-engine cars, running the AC, the way we produce food... it goes on and on. So, is one more straw (AI’s water and energy demands) on the camel’s back going to make any difference? Could it be the straw that breaks it?

Perhaps. Perhaps not.

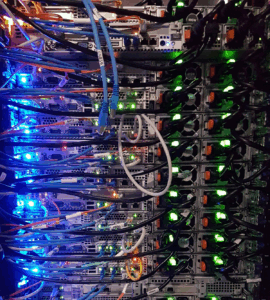

Photo by Massimo Botturi on Unsplash

The Environmental Impact of Artificial Intelligence: Water and Electricity Consumption

Understanding the Resource Demands of Modern AI Technologies

Artificial Intelligence (AI), particularly in its most advanced forms, has rapidly transformed industries, economies, and societies worldwide. From powering voice assistants to enabling autonomous vehicles and revolutionizing medical diagnostics, AI is now woven into the fabric of daily life. However, behind the seamless user experiences lies a complex, resource-intensive infrastructure. The development, training, and deployment of AI models require significant amounts of electricity and water. This document explores the scope and scale of AI’s water and electricity consumption, the factors influencing these needs, and the implications for sustainability.

1. The Architecture of AI: Why Does It Consume So Much?

Modern AI models, especially those utilizing deep learning, rely on massive datasets and complex neural networks. Training these models is computationally intensive, often requiring the use of high-performance graphics processing units (GPUs) or specialized hardware like Tensor Processing Units (TPUs). The models themselves can contain billions, or even trillions, of parameters. Running these computations requires vast data centers—the digital “factories” of the AI age.

These data centers are the primary sites of AI’s resource consumption. They draw electricity to power the hardware and dissipate the generated heat, while water is used in cooling systems to maintain optimal operating temperatures.

2. Electricity Consumption of AI

2.1. Model Training

Training state-of-the-art AI models is an energy-intensive process. For example, training OpenAI’s GPT-3, which has 175 billion parameters, has been estimated to consume roughly 1,300 megawatt-hours (MWh) of electricity. A 2019 study by the University of Massachusetts Amherst found that training a large natural language processing (NLP) model can consume as much electricity as several average American homes use in a year.

Key factors influencing electricity usage include:

- Scale of the Model: Larger models require more computations, thus more electricity.

- Hardware Efficiency: More efficient chips can lower total consumption, but overall industry trends show increasing resource demand as models grow.

- Training Duration: Some models are trained for weeks or months, multiplying their energy consumption.

- Location: Data center location affects the carbon footprint, depending on the local energy mix (renewable vs. fossil fuel).
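Because these factors multiply together, a rough estimate is simple arithmetic. The Python sketch below is a minimal illustration under stated assumptions: the accelerator count, per-chip wattage, run length, and power usage effectiveness (PUE, the ratio of total facility energy to computing-equipment energy) are hypothetical placeholders rather than figures for any real model, and the 10,500 kWh-per-year household figure is an assumed round number.

```python
# Rough training-energy estimate: every input is a hypothetical placeholder.

def training_energy_mwh(num_accelerators: int,
                        watts_per_accelerator: float,
                        training_hours: float,
                        pue: float) -> float:
    """Whole-facility energy, in MWh, for one training run."""
    # Chip-level energy: count x average draw (W) x hours, converted Wh -> kWh.
    chip_kwh = num_accelerators * watts_per_accelerator * training_hours / 1_000
    # PUE scales chip energy up to include cooling and other facility overhead.
    facility_kwh = chip_kwh * pue
    return facility_kwh / 1_000  # kWh -> MWh


def home_years(energy_mwh: float, home_kwh_per_year: float = 10_500) -> float:
    """Express energy as years of electricity for one home (assumed usage)."""
    return energy_mwh * 1_000 / home_kwh_per_year


# Hypothetical run: 1,000 accelerators averaging 400 W for 30 days at PUE 1.2.
energy = training_energy_mwh(1_000, 400, 30 * 24, pue=1.2)
print(f"{energy:,.0f} MWh, roughly {home_years(energy):,.0f} home-years of electricity")
```

Anything that raises the accelerator count or the run length scales the result proportionally, while more efficient hardware (less power for the same work) or a lower PUE shrinks it. Location does not change the energy figure itself; it changes how much carbon each megawatt-hour carries.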

2.2. Model Inference and Deployment

Training is the most energy-intensive single phase, but running AI models (inference) also requires substantial electricity. Services like real-time language translation, recommendation systems, or image recognition process millions or billions of requests per day, so even a small amount of energy per request adds up. Over a popular model’s lifetime, inference can rival or exceed the energy spent on training.
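To see how small per-request costs accumulate, here is a minimal sketch that assumes steady traffic. The 0.3 Wh-per-request figure, the 100 million daily requests, and the 1,000 MWh training run it is compared against are hypothetical placeholders chosen only to make the arithmetic easy to follow.

```python
# How small per-request costs add up. All figures are hypothetical placeholders.

def daily_inference_mwh(wh_per_request: float, requests_per_day: float) -> float:
    """Energy per day, in MWh, for a service with steady traffic."""
    return wh_per_request * requests_per_day / 1_000_000  # Wh -> MWh


def days_to_match_training(training_mwh: float,
                           wh_per_request: float,
                           requests_per_day: float) -> float:
    """Days of serving traffic needed to use as much energy as training did."""
    return training_mwh / daily_inference_mwh(wh_per_request, requests_per_day)


# Hypothetical service: 0.3 Wh per request, 100 million requests per day,
# compared against a hypothetical 1,000 MWh training run.
daily = daily_inference_mwh(0.3, 100_000_000)
print(f"{daily:.0f} MWh/day, {daily * 365:,.0f} MWh/year")
print(f"Matches training energy after ~{days_to_match_training(1_000, 0.3, 100_000_000):.0f} days")
```

With these placeholder numbers the service uses about 30 MWh a day, so it would match the hypothetical training run in roughly a month and keep consuming for as long as it stays in service.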

2.3. Global Scale: Data Centers and AI

Worldwide, data centers are estimated to consume between 1% and 2% of global electricity. As AI workloads increase, so does their share of this consumption. Major cloud providers—Amazon, Microsoft, Google, and others—have built massive data centers with hundreds of thousands of servers, many dedicated to AI.

For instance, Google reported that its total data center electricity use reached approximately 15.8 terawatt-hours (TWh) in 2021. Although not all of this is due to AI, the proportion is rising rapidly as AI becomes central to more services.

3. Water Consumption of AI

3.1. Why Water Is Needed

Nearly all the electricity servers consume ends up as heat, and removing that heat is essential to prevent equipment failure. Data centers employ various cooling technologies, many of which rely on water as an efficient heat transfer medium. This water is cycled through the system, absorbing heat from the servers before being cooled and reused or evaporated in cooling towers.

3.2. Quantifying Water Use

The exact amount of water used for AI operations is difficult to isolate, as data centers often report aggregate figures. However, estimates suggest that a medium-sized data center can use hundreds of thousands to millions of gallons of water per day for cooling.

According to recent reports, training a large AI model like GPT-3 can consume direct and indirect water resources equivalent to the drinking needs of hundreds of people over a period of months. Google reported that its data centers consumed about 4.3 billion gallons of water in 2021, much of it tied to AI and cloud computing workloads.

Water consumption varies based on:

- Cooling Technology: Some data centers use air cooling, which reduces water use but may increase electricity demand.

- Climate: Centers in hot, dry regions tend to use more water to maintain safe temperatures.

- Efficiency Measures: Companies are investing in advanced heat exchangers and closed-loop systems to reduce water footprints.
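The cooling trade-off can be sketched by splitting water use into a direct part (evaporated on site for cooling) and an indirect part (consumed by power plants generating the electricity). In this minimal Python sketch, the per-kWh water intensities and PUE values are hypothetical placeholders; the on-site intensity plays roughly the role of the industry’s water usage effectiveness (WUE) metric, liters per kWh of IT energy.

```python
# Direct (on-site) plus indirect (power generation) water use.
# All intensities and PUE values are hypothetical placeholders.

LITERS_PER_GALLON = 3.785


def water_use_liters(it_energy_kwh: float,
                     pue: float,
                     onsite_l_per_kwh: float,
                     offsite_l_per_kwh: float) -> dict[str, float]:
    """Split a workload's water use into direct, indirect, and total liters."""
    # Direct: water evaporated by the cooling system, per kWh of IT energy.
    direct = it_energy_kwh * onsite_l_per_kwh
    # Indirect: water consumed generating all the electricity the facility
    # draws, including the cooling overhead captured by PUE.
    indirect = it_energy_kwh * pue * offsite_l_per_kwh
    return {"direct": direct, "indirect": indirect, "total": direct + indirect}


# Same hypothetical 100,000 kWh IT workload under two cooling setups.
setups = {
    "evaporative cooling": water_use_liters(100_000, pue=1.2,
                                            onsite_l_per_kwh=1.8,
                                            offsite_l_per_kwh=3.0),
    "air cooling": water_use_liters(100_000, pue=1.5,
                                    onsite_l_per_kwh=0.1,
                                    offsite_l_per_kwh=3.0),
}
for name, water in setups.items():
    gallons = {k: v / LITERS_PER_GALLON for k, v in water.items()}
    print(f"{name}: direct {gallons['direct']:,.0f} gal, "
          f"indirect {gallons['indirect']:,.0f} gal, "
          f"total {gallons['total']:,.0f} gal")
```

With these placeholder numbers, air cooling cuts on-site water sharply, but its higher PUE means more electricity and therefore more indirect water at the power plant. That is why the cooling choice is a trade-off rather than a free win.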

4. The Environmental Implications

4.1. Carbon Footprint

The electricity used by AI often comes, at least in part, from fossil fuel sources. This results in significant greenhouse gas emissions. Studies have estimated that training a large language model can emit as much carbon dioxide as several cars over their entire lifetimes, depending on the energy source.
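Because emissions are energy multiplied by the carbon intensity of the grid supplying it, the same workload can have very different footprints in different places. The sketch below assumes a hypothetical 1,000 MWh workload and three illustrative grid intensities; they are round placeholder numbers, not published figures for any real grid.

```python
# Same energy, different grids. Intensities are hypothetical round numbers.

def emissions_tonnes_co2(energy_mwh: float, grid_kg_per_kwh: float) -> float:
    """Tonnes of CO2 for an energy use at a given grid carbon intensity."""
    # 1 MWh = 1,000 kWh and 1,000 kg = 1 tonne, so MWh x kg/kWh = tonnes.
    return energy_mwh * grid_kg_per_kwh


grid_intensity_kg_per_kwh = {
    "coal-heavy grid": 0.9,
    "mixed grid": 0.4,
    "mostly renewable grid": 0.05,
}
for grid, intensity in grid_intensity_kg_per_kwh.items():
    tonnes = emissions_tonnes_co2(1_000, intensity)  # hypothetical 1,000 MWh
    print(f"{grid}: about {tonnes:,.0f} t CO2")
```

Under these placeholder intensities, the same workload’s emissions differ by a factor of 18 between the coal-heavy and mostly renewable grids, which is why where a data center draws its power matters so much.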

4.2. Water Scarcity Concerns

Data centers located in water-stressed regions can exacerbate local shortages, raising concerns among communities and policymakers. The competition for clean water is especially acute in drought-prone areas.

5. Industry Responses and Solutions

5.1. Renewable Energy Commitments

Major tech companies are investing heavily in renewables to power their data centers. Google and Microsoft, for example, have set goals to run on carbon-free electricity by 2030, and Amazon has committed to powering its operations with 100% renewable energy.

5.2. Efficiency Innovations

The industry is pursuing ways to make AI more sustainable, including:

- Developing more efficient chips and hardware architectures

- Optimizing algorithms to require less computation

- Improving cooling systems to use less water

- Locating data centers in cooler climates or near sources of renewable energy

5.3. Transparency and Reporting

Stakeholders are calling for greater transparency around data center water and electricity use. Some companies now publicly disclose their environmental impacts, enabling consumers and regulators to make informed decisions.

6. Future Outlook: Balancing AI Advancement and Sustainability

As AI capabilities expand, so too will its environmental footprint if current trends continue. Policymakers, researchers, and industry leaders must collaborate to ensure that AI advances do not come at unsustainable ecological costs. Promising avenues include:

- Investing in research on energy- and water-efficient AI

- Developing regulations that incentivize sustainable practices

- Educating the public and businesses about the impacts of digital technologies

- Encouraging a circular economy for AI hardware and infrastructure

7. Conclusion

Artificial Intelligence is poised to shape the future in profound ways, but its energy and water requirements are non-trivial and growing. The challenge ahead is to harness the power of AI while minimizing its environmental impact—through innovation, transparency, and a shared commitment to sustainability.

By understanding and addressing AI’s resource demands, society can help ensure that technological progress supports not just economic and social goals, but environmental stewardship as well.